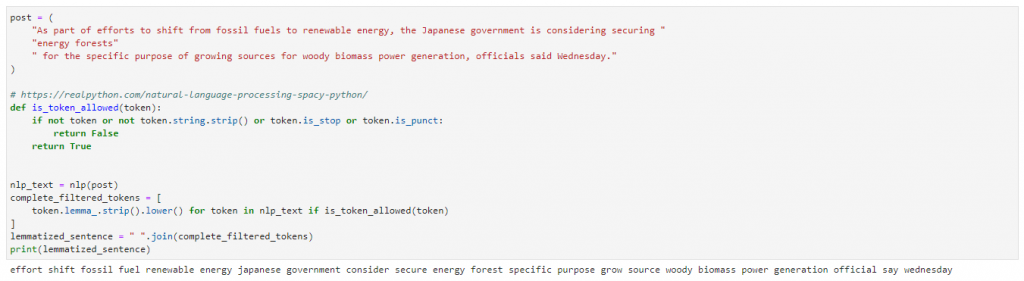

Luckily, there are NLP libraries that make the analysis of verb morphology (the study of the internal structure of verbs, e.g. their tense) much easier. Restricting ourselves to domain events for now, this means that in post-processing we have to ensure (1) that all orange sticky notes contain a past-tense verb and (2) that stickies containing past-tense verbs are orange. Text preparation also involves removing less informative words such as stopwords, punctuation, etc.
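Once each note's tokens carry part-of-speech tags, the two post-processing rules above reduce to a simple consistency check. The sketch below is a minimal illustration. It assumes the tags are Penn Treebank tags such as those in spaCy's `token.tag_` for an English pipeline. The `StickyNote` class and its fields are hypothetical, and the example tags are written by hand rather than produced by a tagger.

```python
from dataclasses import dataclass

# Penn Treebank tags treated as past-tense verb forms. VBN (past participle)
# is included as an assumption, because short notes such as "order placed"
# are often tagged that way.
PAST_TENSE_TAGS = {"VBD", "VBN"}


@dataclass
class StickyNote:
    text: str
    colour: str                   # hypothetical field, e.g. "orange" or "blue"
    tags: list[tuple[str, str]]   # (token text, Penn Treebank tag) pairs


def has_past_tense_verb(note: StickyNote) -> bool:
    return any(tag in PAST_TENSE_TAGS for _, tag in note.tags)


def find_inconsistent_notes(notes: list[StickyNote]) -> list[StickyNote]:
    """Return the notes that violate either rule:
    (1) every orange note contains a past-tense verb, and
    (2) every note containing a past-tense verb is orange."""
    return [n for n in notes if (n.colour == "orange") != has_past_tense_verb(n)]


if __name__ == "__main__":
    notes = [
        StickyNote("order placed", "orange", [("order", "NN"), ("placed", "VBN")]),
        StickyNote("send notification", "orange", [("send", "VB"), ("notification", "NN")]),
        StickyNote("payment received", "blue", [("payment", "NN"), ("received", "VBN")]),
    ]
    for note in find_inconsistent_notes(notes):
        print(note.text)  # prints "send notification", then "payment received"
```

Comparing the two booleans with `!=` covers both rules at once: an orange note without a past-tense verb and a non-orange note with one are both flagged.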

# spaCy clean text: how to

Domain events are described by a verb in the past tense (e.g., “placed order”), while commands, as they represent intention, are phrased in the imperative (e.g., “send notification”). These conventions sound simple, but once participants are “in the flow”, they sometimes have a hard time sticking to them.
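The naming conventions can also be applied in the other direction: given a note's tags, infer which concept its verb form encodes. The helper below is a hypothetical sketch. It assumes Penn Treebank tags (as in spaCy's `token.tag_`), treats a past-tense form as a domain event, and treats a base-form verb (`VB`, the tag imperatives usually receive) as a command.

```python
# Hypothetical helper: infer the Event Storming concept from a note's verb form.
def classify_note(tags: list[tuple[str, str]]) -> str:
    """tags: (token text, Penn Treebank tag) pairs for one sticky note.
    Returns "domain event", "command" or "unknown" based on the first verb."""
    for _, tag in tags:
        if tag in {"VBD", "VBN"}:   # past tense / past participle, e.g. "placed order"
            return "domain event"
        if tag == "VB":             # base form used as imperative, e.g. "send notification"
            return "command"
    return "unknown"                # no verb found, so neither convention is followed


print(classify_note([("placed", "VBD"), ("order", "NN")]))         # domain event
print(classify_note([("send", "VB"), ("notification", "NN")]))     # command
```

A note classified as a domain event would then be expected on an orange sticky, and a command on a blue one.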

To address questions like these, it is essential that specific DDD concepts are represented correctly by the sticky notes. On the one hand, Event Storming uses a very specific colour-coded key: we represent domain events with orange sticky notes, while commands are written on blue ones. On the other hand, the verb form is crucial.

# spaCy clean text: install

Install spacy-cleaner with `pip install -U spacy-cleaner` or with Poetry: `poetry add spacy-cleaner`. Then you can run `spacy-cleaner --help`, or with Poetry: `poetry run spacy-cleaner --help`.

Makefile usage: the Makefile contains a lot of functions for faster development. To download and install Poetry, run `make poetry-download`. To uninstall …

Within the framework of our Innovation Incubator, the idea arose to develop some tools to facilitate the post-processing of Event Storming sessions conducted in Miro. These tools should help extract information from event stormings for further analysis. In a previous blog post, we discussed how to extract data from Miro Boards. In this blog post, I will show you which text preparation steps are necessary to be able to analyse sticky notes from event stormings, and how this analysis can be carried out with the help of the spaCy library.

Possible questions that are worth exploring during the post-processing of Event Storming sessions include:

- Are there domain events that occur several times?
- How are the domain events distributed across the timeline?
- Who was the most active participant in a specific bounded context?

We’ve also included BeautifulSoup as a failsafe fallback function. Hopefully you can now easily extract text content from either a single URL or multiple URLs.
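The post-processing questions listed above can be answered with simple counting once the sticky notes are available as records. The sketch below assumes a hypothetical export format: a list of dicts with `text`, `colour`, `author`, `context` and `x` keys (these names are not Miro's API). It also assumes that the Event Storming timeline runs left to right along the x axis.

```python
from collections import Counter
from typing import Optional

# Hypothetical export format: one dict per sticky note with the keys
# "text", "colour", "author", "context" (bounded context) and "x" (position).


def repeated_domain_events(notes: list[dict]) -> dict[str, int]:
    """Domain events (orange notes) whose text occurs more than once."""
    counts = Counter(n["text"].lower() for n in notes if n["colour"] == "orange")
    return {text: c for text, c in counts.items() if c > 1}


def events_along_timeline(notes: list[dict]) -> list[str]:
    """Domain events ordered by x position (assumes time runs left to right)."""
    events = [n for n in notes if n["colour"] == "orange"]
    return [n["text"] for n in sorted(events, key=lambda n: n["x"])]


def most_active_participant(notes: list[dict], context: str) -> Optional[str]:
    """Author with the most notes in the given bounded context."""
    counts = Counter(n["author"] for n in notes if n["context"] == context)
    return counts.most_common(1)[0][0] if counts else None


notes = [
    {"text": "Order placed", "colour": "orange", "author": "alice", "context": "Ordering", "x": 300},
    {"text": "order placed", "colour": "orange", "author": "bob", "context": "Ordering", "x": 100},
    {"text": "Send confirmation", "colour": "blue", "author": "alice", "context": "Ordering", "x": 150},
    {"text": "Payment received", "colour": "orange", "author": "carol", "context": "Billing", "x": 200},
]
print(repeated_domain_events(notes))               # {'order placed': 2}
print(events_along_timeline(notes))                # ['order placed', 'Payment received', 'Order placed']
print(most_active_participant(notes, "Ordering"))  # alice
```

Lowercasing before counting is a deliberate choice here, so that “Order placed” and “order placed” count as the same event. A real analysis might also lemmatize the verbs first.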

# spaCy clean text: code

Humans have amassed seemingly endless amounts of text. The following code can be used to clean it.
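As a minimal illustration of the cleaning steps mentioned earlier (lowercasing and removing stopwords and punctuation), here is a standard-library sketch. In spaCy the same filter would typically use the token attributes `is_stop` and `is_punct`. To keep the example dependency-free, it uses a tiny hypothetical stop-word set instead of spaCy's list.

```python
import re
import string

# Tiny stop-word list for illustration only (hypothetical); spaCy's English
# list is much larger and is exposed per token as token.is_stop.
STOP_WORDS = {"a", "an", "and", "by", "is", "of", "the", "to", "was"}
PUNCTUATION = set(string.punctuation)


def clean_text(text: str) -> str:
    """Lowercase, tokenize, and drop stopwords and punctuation."""
    # Split into word tokens and single punctuation characters; whitespace
    # (spaces, tabs, newlines) is discarded by the regex.
    tokens = re.findall(r"\w+|[^\w\s]", text.lower())
    return " ".join(t for t in tokens if t not in STOP_WORDS and t not in PUNCTUATION)


print(clean_text("The order\twas placed  by the customer!"))  # order placed customer
```

`string.punctuation` covers only ASCII punctuation, so typographic quotes such as “ ” would survive this filter. Handling cases like these is one reason to rely on spaCy's tokenizer and `is_punct` in practice.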

# spaCy clean text: PDF

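We will first load the PDF document, clean the text and then convert it into a spaCy `Doc` object. spaCy is an open-source library for natural language processing, designed specifically for production use; it helps you build applications that process and understand large volumes of text. It can be used to build information extraction or natural language understanding systems, or to pre-process text for deep learning.

In this chapter, we will develop blueprints for a text preprocessing pipeline. The pipeline will take the raw text as input, clean it, transform it, and extract …

For web pages, the text is extracted with Trafilatura, with BeautifulSoup as a fallback function so that we can always return a value for the text content. The list of skipped non-content tags below is an illustrative assumption:

```python
import json

import requests
import trafilatura
from bs4 import BeautifulSoup


def beautifulsoup_extract_text_fallback(response_content):
    """Fallback function, so that we can always return a value for text
    content, even when Trafilatura is unable to extract the text."""
    soup = BeautifulSoup(response_content, 'html.parser')
    # Loop over every text item and make sure that its enclosing
    # beautifulsoup4 tag is not one of these non-content tags:
    skip_tags = {'[document]', 'head', 'meta', 'noscript', 'script', 'style'}
    cleaned_text = ' '.join(item for item in soup.find_all(string=True)
                            if item.parent.name not in skip_tags)
    # Remove any tab separation and strip the text:
    return cleaned_text.replace('\t', '').strip()


def extract_text_from_single_web_page(url):
    downloaded_url = trafilatura.fetch_url(url)  # None if the download failed
    if downloaded_url:
        a = trafilatura.extract(downloaded_url, output_format='json',
                                with_metadata=True, include_comments=False)
        if a:
            return json.loads(a)['text']
    # Trafilatura could not extract anything, so fall back to BeautifulSoup:
    resp = requests.get(url, timeout=10)
    if resp.status_code == 200:
        return beautifulsoup_extract_text_fallback(resp.content)
    return None  # both extraction methods failed
```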
